As developers, we need our APIs to perform well. A slow database or a lagging endpoint can have severe consequences for our applications. Performance is not the only reason to implement API limiting, though. It also acts as a safety measure against Distributed Denial of Service (DDoS) attacks, which can overload a server with a flood of API requests.

Unrestrained use of an API carries serious risks: overwhelmed servers, exposure of sensitive data, and service downtime. API limiting counters these risks by controlling and managing API usage, which makes the API more reliable and secure.

In this article, we will explore the meaning, importance, types, and use cases of API limiting, and then apply it to a simple API. By the end of this article, you will understand how to rate limit your APIs, thereby enhancing scalability while mitigating risks.

What is API Limiting?

API limiting is the process of controlling the rate and frequency at which users can access and use APIs. It is achieved by setting specific thresholds, such as the number of requests per minute or hour, and limiting users' access to the API when they exceed those thresholds. By enforcing API limits, organizations can prevent API abuse and overload, improve service reliability, and enhance security.
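To make the idea of a threshold concrete, here is a minimal sketch of a fixed-window counter, one of the simplest ways to count requests per client. The class and method names are our own for illustration and do not come from any library, and a real service would usually keep these counters in a shared store rather than in process memory.

```javascript
// Minimal fixed-window counter: allows `limit` requests per `windowMs` for each key.
// All names here are illustrative, not taken from any library.
class FixedWindowLimiter {
  constructor(limit, windowMs) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.hits = new Map(); // key -> { count, windowStart }
  }

  // Returns true if the request is allowed, false if the key is over its threshold.
  // `now` is injectable so the behavior can be checked without waiting.
  allow(key, now = Date.now()) {
    const entry = this.hits.get(key);
    // Start a fresh window on first sight, or once the old window has elapsed.
    if (!entry || now - entry.windowStart >= this.windowMs) {
      this.hits.set(key, { count: 1, windowStart: now });
      return true;
    }
    if (entry.count < this.limit) {
      entry.count += 1;
      return true;
    }
    return false;
  }
}

// Usage: 3 requests per minute for one client.
const limiter = new FixedWindowLimiter(3, 60_000);
const results = [0, 1, 2, 3].map((i) => limiter.allow("client-a", 1_000 + i));
console.log(results); // [ true, true, true, false ]
```

The fourth request inside the same minute is rejected, while a request arriving after the window has elapsed would start a new count.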

Think of it this way: offering full access to your API is the same as granting unrestricted access to your platform. This can lead to negative consequences, such as users overusing the API and consuming excessive resources, which diminishes its value for everyone else. While it is great that people want to use your API and find it useful, an open-door policy can hurt your business and hinder scalability. Rate limiting is therefore a critical component in managing API usage, preserving value, and achieving business objectives.

Types of API Limiting

Now that you understand what API limiting is and why it matters, let's look at the main types of API limiting techniques:

IP-based limiting: This method limits API access based on the client's IP address. Requests are counted per address, and once an address exceeds its allowance, further requests from it are rejected until the window resets. Its main advantages are simplicity and ease of implementation. Its significant drawback is that it can be bypassed with proxies or VPNs, which makes it less effective against determined attackers. Services such as Cloudflare use IP-based limiting to mitigate DDoS attacks, blocking traffic from blacklisted IP addresses to keep the network available.

Token-based limiting: This method assigns a unique token to each user, which is used to track and limit API usage. Tokens are issued for a specific period, and users need to renew them after expiry. The advantage of token-based limiting is that it is more secure than IP-based limiting, because users can only access the API if they have a valid token. However, token management can be challenging, especially for large-scale applications. Services such as Twilio use token-based limiting to secure their APIs. Twilio issues unique API keys to its users, which are used to authenticate API requests and limit usage based on the user's plan.

Quota-based limiting: This method limits API usage based on the number of requests allowed within a specific time frame, such as per hour or per day. It is a flexible method that lets developers customize usage limits to individual user requirements. The downside is that it can be hard to determine the right quota for each user, and finding the correct balance may take some trial and error. Services such as the Google Maps API use quota-based limiting. It allows developers to set a daily limit on API usage, which can be customized to the application's requirements.

Hybrid-based limiting: This method combines two or more limiting methods, such as IP-based, token-based, and quota-based limiting. The benefit of hybrid-based limiting is that it leverages the strengths of each method, creating a more comprehensive and robust API limiting strategy. Services such as Stripe use a combination of token-based and quota-based limiting to secure their APIs. Stripe assigns unique API keys to its users and limits API usage based on the user's plan and the type of API request.
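In practice, the IP-based, token-based, and hybrid methods differ mainly in which key the usage is counted against. The sketch below shows one way to derive that key. The request shape loosely mirrors Express (`req.ip`, `req.headers`), and the `x-api-key` header name and all function names are assumptions made for illustration.

```javascript
// Hypothetical key-derivation helpers. Nothing here depends on Express itself;
// the objects below only imitate the parts of a request that we need.

// IP-based: every request from the same address shares one counter.
const ipKey = (req) => `ip:${req.ip}`;

// Token-based: every request carrying the same API key shares one counter.
// "x-api-key" is an assumed header name.
const tokenKey = (req) => {
  const token = req.headers["x-api-key"];
  return token ? `token:${token}` : null;
};

// Hybrid: prefer the token when present, otherwise fall back to the IP address.
const hybridKey = (req) => tokenKey(req) ?? ipKey(req);

const anonymous = { ip: "203.0.113.7", headers: {} };
const registered = { ip: "203.0.113.7", headers: { "x-api-key": "abc123" } };

console.log(hybridKey(anonymous));  // ip:203.0.113.7
console.log(hybridKey(registered)); // token:abc123
```

With the hybrid key, two registered users behind the same office network are counted separately, while anonymous traffic is still limited per address. Rate-limiting libraries typically expose a hook for supplying such a key function, so check the documentation of the one you use.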

When to Use API Limiting

Implementing rate limiting is one of the most common ways to ensure efficient API performance. When an API is used excessively, it can slow down the database and cause lags, ultimately resulting in a poor user experience. By implementing rate limiting, developers can ensure that API requests are processed efficiently and prevent the overloading of the server.

Another important use case for API limiting is protecting the API from malicious attacks, such as DDoS attacks, which flood the server with requests. By implementing rate limiting, developers can cap the number of requests that a client can make within a specific time frame, making it much harder to overwhelm the server.

API limiting also helps protect sensitive data. Capping request rates slows down brute-force attempts and bulk scraping, and monitoring API usage makes unusual access patterns easier to spot. Combined with proper authorization, this helps ensure that only authorized users reach sensitive data.

Furthermore, API limiting can be used to manage and control costs associated with API usage. By limiting the number of requests a user can make, developers can prevent excessive usage, which can result in increased costs.

Best Practices for API Limiting

Determine appropriate limits

One of the most crucial aspects of implementing API limiting is determining appropriate limits for your API usage. The goal is to find a balance between providing enough access for users while still maintaining control and preventing abuse.

To determine appropriate limits, you should consider the following factors:

Business Needs: Your API usage limits should align with your business needs and goals. It is essential to evaluate how much traffic your API can handle, what level of service you want to provide, and what kind of users you are targeting.

User Behavior: Your API usage limits should be based on user behavior, such as the number of requests, the rate of requests, and the size of the response. You should also consider how frequently users are accessing the API and the impact on the server resources.

Scalability: As your API usage grows, you should ensure that the limits are scalable to accommodate the increasing traffic.
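As a rough illustration of how these factors can be turned into a number, the sketch below derives a starting per-client limit from server capacity. Every figure in it is a made-up input rather than a recommendation, and the function name is our own. Treat the result as a first guess to refine against real traffic.

```javascript
// Rough starting point for a per-client limit. All figures are made-up inputs.
function perClientLimit({ capacityPerSecond, activeClients, headroom, windowSeconds }) {
  // Reserve a fraction of capacity (headroom) for spikes and internal traffic.
  const usablePerSecond = capacityPerSecond * (1 - headroom);
  // Split what is left evenly across clients, then scale to the window length.
  return Math.floor((usablePerSecond / activeClients) * windowSeconds);
}

const limit = perClientLimit({
  capacityPerSecond: 200, // sustainable throughput of the API (assumed)
  activeClients: 50,      // expected number of concurrent clients (assumed)
  headroom: 0.25,         // keep 25% of capacity spare
  windowSeconds: 60,      // one-minute window
});
console.log(limit); // 180
```

An even split is the simplest policy. As the number of clients grows, the same calculation yields a lower limit unless capacity grows with it, which is why limits should be revisited as usage scales.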

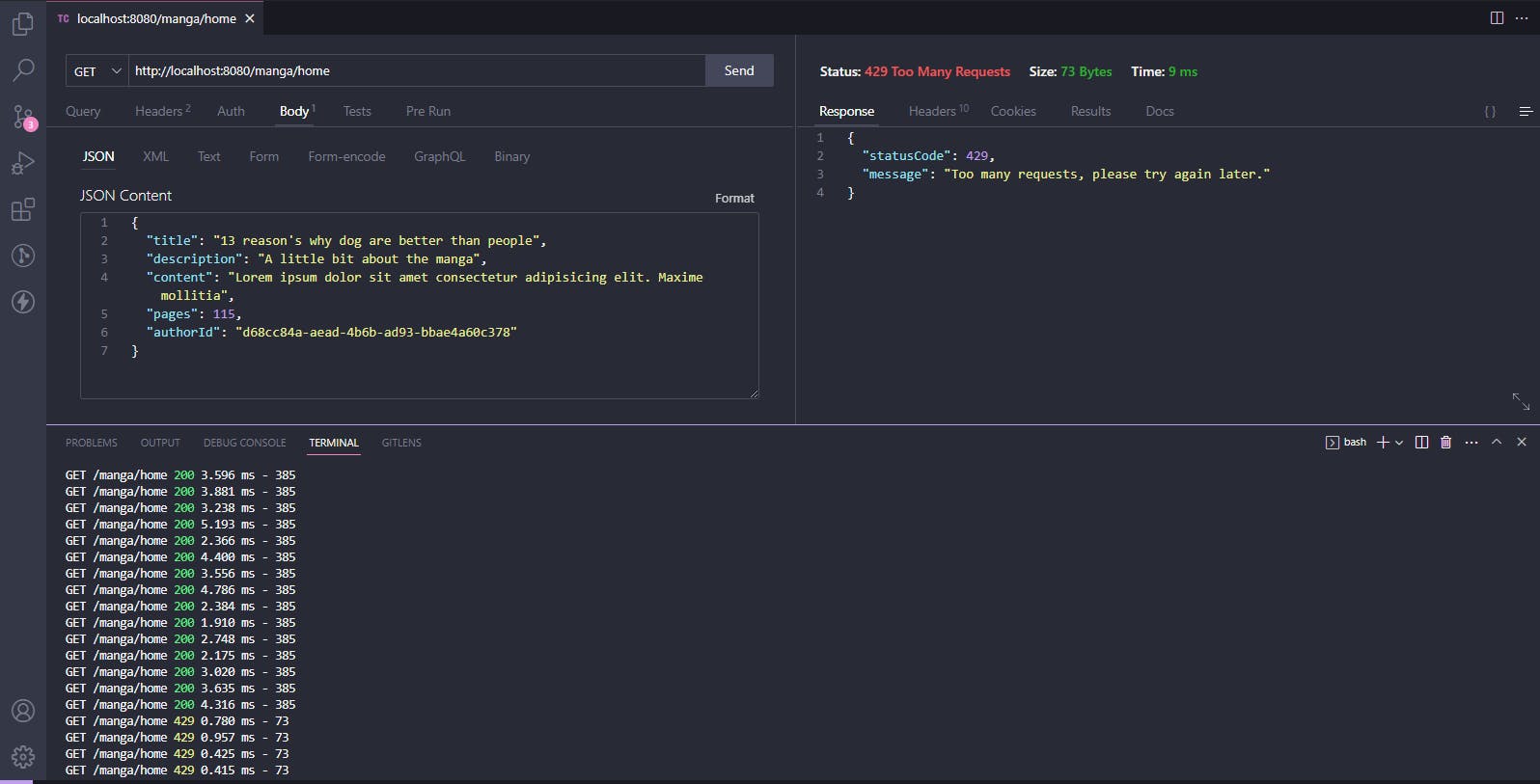

Set clear and informative error messages

When API requests exceed the set limits, it is important to provide clear and informative error messages to users. These messages should tell users that the limit was breached and guide them on how to resolve the issue, for example by stating how long to wait.

    {
      "statusCode": 429,
      "message": "Too many requests. Please wait 10 minutes before sending another request."
    }
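A response like this can be generated rather than hard-coded, so that the waiting time always matches the actual limit. The sketch below builds the status, a `Retry-After` header (a standard HTTP header, expressed here in seconds), and a JSON body. The function name and the shape of the returned object are our own choices for illustration.

```javascript
// Builds a description of a 429 response. The body fields mirror the JSON
// example above; the function name and return shape are illustrative.
function buildRateLimitError(retryAfterSeconds) {
  const minutes = Math.ceil(retryAfterSeconds / 60);
  const unit = minutes === 1 ? "minute" : "minutes";
  return {
    status: 429,
    // Tells well-behaved clients how many seconds to wait before retrying.
    headers: { "Retry-After": String(retryAfterSeconds) },
    body: {
      statusCode: 429,
      message: `Too many requests. Please wait ${minutes} ${unit} before sending another request.`,
    },
  };
}

const error = buildRateLimitError(600);
console.log(error.headers["Retry-After"]); // 600
console.log(error.body.message);
```

Putting the waiting time in both the header and the message serves automated clients and human readers alike.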

Allow for flexibility and exceptions

While it is essential to have appropriate limits in place, you should also allow for flexibility and exceptions. For example, you may need to provide higher limits for premium users or allow temporary increases in usage during peak times. You should also have a process in place for handling exceptions and appeals from users who have exceeded the limits.
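One way to express such exceptions is to look the limit up per user instead of using a single constant. The following sketch assumes a hypothetical plan table and a map of temporary overrides. The plan names, numbers, and user IDs are invented for illustration.

```javascript
// Hypothetical plan table: requests allowed per window.
const PLAN_LIMITS = new Map([
  ["free", 100],
  ["premium", 1000],
]);

// Temporary overrides, for example granted after a user appeals.
// Keys are user IDs; expiresAt is a timestamp in milliseconds.
const overrides = new Map([["user-42", { limit: 5000, expiresAt: 2_000 }]]);

// Returns the limit that applies to this user at time `now`.
function limitFor(user, now = Date.now()) {
  const override = overrides.get(user.id);
  if (override && now < override.expiresAt) return override.limit;
  // Unknown plans fall back to the free tier.
  return PLAN_LIMITS.get(user.plan) ?? PLAN_LIMITS.get("free");
}

console.log(limitFor({ id: "user-1", plan: "premium" }, 1_000)); // 1000
console.log(limitFor({ id: "user-42", plan: "free" }, 1_000));   // 5000 (override active)
console.log(limitFor({ id: "user-42", plan: "free" }, 3_000));   // 100 (override expired)
```

Because overrides expire on their own, a temporary increase does not silently become permanent. Some rate-limiting packages, including express-rate-limit, accept a function instead of a fixed number for the maximum, which is where a lookup like this could be plugged in.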

Implementing Rate Limiting in a Node.js Application

Have you ever been to a buffet and seen that one person who just keeps piling up their plate with food?

While it might seem tempting to follow in their footsteps, it's not fair to the other people waiting in line. Similarly, in API development, it's important to ensure that one client doesn't hog all the resources and leave others waiting.

That's where rate limiting comes in. By setting a limit on the number of requests a client can make within a certain time frame, we can ensure that everyone gets a fair share of the resources. In our simple CRUD project, we will implement rate limiting using the express-rate-limit package.

So, just like at a buffet, we encourage everyone to take only what they need and leave some for the other clients. With our simple rate-limiting implementation, we can ensure that everyone gets a fair share of the resources and can enjoy a smooth and efficient experience with our API.

Let’s create a simple CRUD project called “manga-reader” to demonstrate the implementation of rate limiting.

Prerequisites

To follow along with this guide, you will need:

Node.js installed on your machine

A basic understanding of JavaScript and Express.js

Creating the Project

Start by creating a new directory for your project and initializing it with yarn:

    mkdir manga-reader
    cd manga-reader
    yarn init

Install the necessary dependencies:

yarn add express morgan dotenv prisma @prisma/client express-rate-limit

Schema of the Project

The schema for the project defines two models - Author and Manga - with a one-to-many relationship between them. The Manga model contains various attributes such as title, description, and language.

    // schema.prisma
    generator client {
      provider = "prisma-client-js"
    }

    datasource db {
      provider = "mysql"
      url      = env("DATABASE_URL")
    }

    model Author {
      id     String  @id @unique @default(uuid())
      name   String
      bio    String
      mangas Manga[]
    }

    model Manga {
      id          String   @id @unique @default(uuid())
      title       String
      description String
      content     String
      published   Boolean  @default(false)
      pages       Int
      language    Language @default(English)
      author      Author   @relation(fields: [authorId], references: [id])
      authorId    String
      created_on  DateTime @default(now())
      updated_on  DateTime @updatedAt()
    }

    enum Language {
      English
      French
      Japanese
    }

Implementing Rate Limiting

With the express-rate-limit package, we can add rate limiting to our project in a few lines.

    // index.js
    // Note: the import syntax below requires "type": "module" in package.json
    // (or an .mjs file extension).
    import morgan from "morgan";
    import express from "express";
    import * as dotenv from "dotenv";
    import rateLimit from "express-rate-limit";
    import index from "./routes/index.route.js";

    dotenv.config();

    const app = express();

    const apiLimiter = rateLimit({
      windowMs: 15 * 60 * 1000, // 15 minutes
      max: 100, // limit each client (IP address) to 100 requests per window
      message: "Too many requests, please try again later.",
    });

    app.use(express.json());
    app.use(morgan("dev"));
    app.use(express.urlencoded({ extended: false }));

    // Apply the limiter once, to every route. Mounting the same instance again
    // on "/manga" would count each request to that route twice.
    app.use(apiLimiter);

    // routes
    app.use("/manga", index);

    // Home
    app.get("/", (_req, res) => {
      return res.status(200).json({ statusCode: 200, message: "Manga Reader Example API" });
    });

    // 404 handler: catches any request, of any method, that no route above handled
    app.use((_req, res) => {
      return res.status(404).json({ statusCode: 404, message: "Route not found" });
    });

    export default app;

In the code above, we create a rateLimit middleware instance configured with the windowMs and max options. The windowMs option sets the time interval over which requests are counted (in this case, 15 minutes), while max sets the maximum number of requests a client can make within that interval (in this case, 100).

In this simple application, if a client makes more than 100 requests within a 15-minute window, further requests receive a 429 (Too Many Requests) response carrying the message we configured. This helps to prevent one client from overwhelming the system and ensures that all clients have fair access to the resources. To keep things user-friendly, the response pairs a clear and concise error message with a status code that identifies the cause. This way, clients can easily understand what went wrong and take the necessary steps to rectify the issue.
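If you want the limit response to be JSON in the same shape as the rest of the API, express-rate-limit provides a `handler` option that is called with `(req, res, next, options)` once a client exceeds the limit. The sketch below is one possible handler, not the only way to do it. It assumes `message` is configured as a plain string, as in our example, and the stand-in response object exists only so the function can be tried without starting a server.

```javascript
// Sketch of a custom handler for express-rate-limit's `handler` option.
// It would be wired in as: rateLimit({ windowMs, max, message, handler: jsonLimitHandler })
function jsonLimitHandler(_req, res, _next, options) {
  // options.statusCode defaults to 429; options.message is the configured message.
  res.status(options.statusCode).json({
    statusCode: options.statusCode,
    message: options.message,
  });
}

// Standalone check with a stand-in response object (no Express required).
const fakeRes = {
  statusCode: 200,
  body: null,
  status(code) { this.statusCode = code; return this; },
  json(payload) { this.body = payload; return this; },
};

jsonLimitHandler({}, fakeRes, () => {}, {
  statusCode: 429,
  message: "Too many requests, please try again later.",
});
console.log(fakeRes.statusCode, fakeRes.body.message);
// 429 Too many requests, please try again later.
```

Keeping the handler as a separate function makes it easy to reuse across several limiters if you later add stricter limits for specific routes.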

Conclusion

Implementing API rate limiting in your Node.js project is an important step toward maintaining the stability and reliability of your application. With the use of packages like express-rate-limit or rate-limiter-flexible, you can easily set limits on requests and prevent abuse of your API by malicious users.

It is important to remember that rate limiting is just one aspect of securing your API. There are other security measures you can implement, such as authentication and authorization, to ensure that only authorized users have access to sensitive data.

So, have fun implementing rate limiting in your Node.js projects, and keep your API secure and stable.